Facebook AI Research has announced TransCoder, a system that uses unsupervised deep learning to convert code from one programming language to another.

TransCoder was trained on more than 2.8 million open-source projects and outperforms existing code-translation systems that rely on rule-based methods.

The team described the system in a paper published on arXiv. TransCoder is inspired by neural machine translation (NMT) systems, which use deep learning to translate text from one natural language to another, and it is trained only on monolingual data.

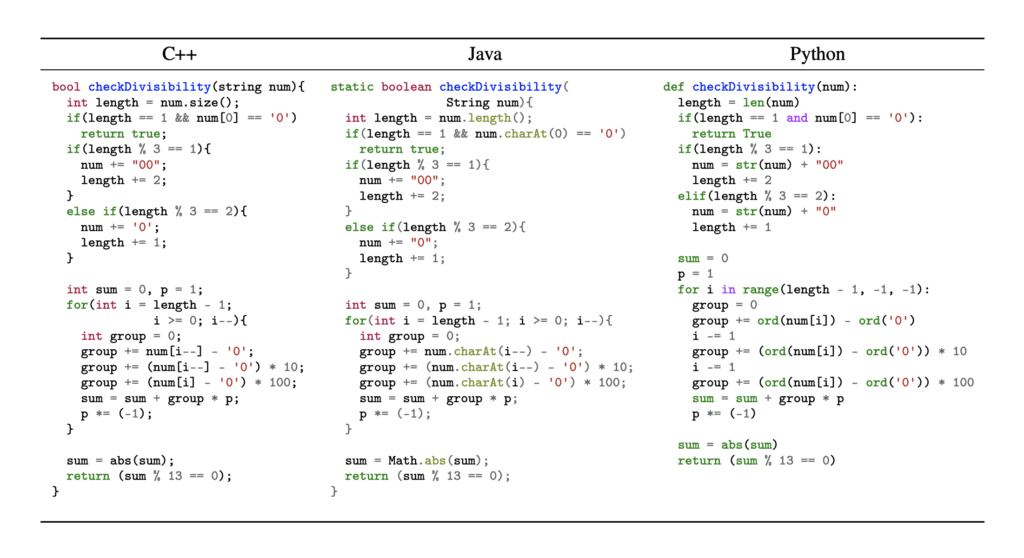

To benchmark the model, the Facebook team compiled a validation set of 852 functions, with associated unit tests, in each of the system's target languages: Java, Python, and C++.

On this validation set, TransCoder outperformed existing translation tools by up to 33 percentage points in the case of j2py, a Java-to-Python translator.

Although the team limited its work to those three languages, the researchers claim that the approach "can easily be extended to most programming languages."

Automated tools that translate source code from one language to another, also known as source-to-source compilers, transcompilers, or transpilers, have existed since the 1970s.

Most of these tools work much like a standard compiler: they parse the source code into an abstract syntax tree (AST).

The AST is then converted back into source code in a different language, usually by applying rewrite rules.
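The parse-then-rewrite pipeline described above can be sketched with Python's standard `ast` module. This is a toy, hypothetical transpiler rather than any real tool: it handles only a one-statement Python function over integers and emits a Java-style static method, with each supported operator acting as one rewrite rule.

```python
import ast

# Hypothetical rewrite rules: Python AST operator node types -> Java tokens.
BINARY_OPERATORS = {ast.Add: "+", ast.Sub: "-", ast.Mult: "*"}


def emit_expression(node: ast.expr) -> str:
    """Rewrite one Python expression node as Java-style source text."""
    if isinstance(node, ast.Name):
        return node.id
    if isinstance(node, ast.Constant) and type(node.value) is int:
        return str(node.value)
    if isinstance(node, ast.BinOp) and type(node.op) in BINARY_OPERATORS:
        left = emit_expression(node.left)
        right = emit_expression(node.right)
        return f"({left} {BINARY_OPERATORS[type(node.op)]} {right})"
    raise NotImplementedError(f"no rewrite rule for {ast.dump(node)}")


def transpile_function(source: str) -> str:
    """Translate a single-`return` Python function into a Java static method.

    Assumes every parameter and the result are ints: the Python source carries
    no static types, so a real transpiler would need type inference here.
    """
    module = ast.parse(source)  # step 1: source code -> AST
    fn = module.body[0]
    if not isinstance(fn, ast.FunctionDef) or len(fn.body) != 1:
        raise ValueError("expected one function with a single statement")
    stmt = fn.body[0]
    if not isinstance(stmt, ast.Return) or stmt.value is None:
        raise ValueError("expected the body to be `return <expression>`")
    params = ", ".join(f"int {arg.arg}" for arg in fn.args.args)
    body = emit_expression(stmt.value)  # step 2: AST -> target code via rules
    return f"static int {fn.name}({params}) {{ return {body}; }}"


print(transpile_function("def area(w, h):\n    return w * h + 1"))
# → static int area(int w, int h) { return ((w * h) + 1); }
```

Every construct without a matching rule raises an error, which hints at why hand-written transpilers take so much effort: each language feature needs its own rule.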

Transpilers are useful in several scenarios. For example, some languages, such as CoffeeScript and TypeScript, are intentionally designed to be transpiled, converting code from a more developer-friendly language into a more widely compatible one (JavaScript).

It is sometimes useful to transpile entire codebases written in obsolete or deprecated languages or language versions; for example, the 2to3 tool was used to port Python code from the obsolete version 2 to version 3.
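The kind of mechanical rewrite that 2to3 automates can be sketched with Python's `ast.NodeTransformer`. The class below is a hypothetical, simplified fixer for the Python 2 `dict.has_key(k)` method, which no longer exists in Python 3. 2to3 ships its own fixer for this case, but it works on a lib2to3 concrete syntax tree that preserves comments and formatting, whereas `ast.unparse` (Python 3.9+) discards them.

```python
import ast


class HasKeyFixer(ast.NodeTransformer):
    """Rewrite `d.has_key(k)` calls into the Python 3 form `k in d`."""

    def visit_Call(self, node: ast.Call) -> ast.AST:
        self.generic_visit(node)  # rewrite any nested calls first
        if (
            isinstance(node.func, ast.Attribute)
            and node.func.attr == "has_key"
            and len(node.args) == 1
            and not node.keywords
        ):
            # d.has_key(k)  ->  Compare(left=k, ops=[In], comparators=[d])
            return ast.Compare(
                left=node.args[0],
                ops=[ast.In()],
                comparators=[node.func.value],
            )
        return node


def fix_has_key(source: str) -> str:
    """Parse the code, apply the rewrite rule, and regenerate source text."""
    tree = HasKeyFixer().visit(ast.parse(source))
    ast.fix_missing_locations(tree)
    return ast.unparse(tree)  # requires Python 3.9+


print(fix_has_key("x = d.has_key(k)"))  # → x = k in d
```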

However, transpilers are far from perfect, and building one requires significant development effort (and often customization).

TransCoder builds on advances in natural language processing (NLP), in particular unsupervised NMT. The model uses a transformer-based sequence-to-sequence architecture consisting of an attention-based encoder and decoder.
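The attention mechanism at the core of a transformer encoder and decoder can be illustrated with single-head scaled dot-product attention, softmax(QKᵀ/√d)·V, written in plain Python. This is a didactic sketch of the standard operation only: it omits the learned projections, multiple heads, and masking that a real transformer uses, and it is not TransCoder's code.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Return softmax(Q K^T / sqrt(d)) V, one output row per query."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output row = attention-weighted average of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# A query that resembles the first key attends mostly to the first value.
print(scaled_dot_product_attention(
    queries=[[4.0, 0.0]],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
))
```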

Because building a dataset for supervised learning would be difficult (it would require many pairs of equivalent code samples in both the source and target languages), the team chose to train on monolingual datasets without supervision, using three strategies.

First, the model is trained on input sequences in which random tokens have been masked; it must learn to predict the correct values of the masked tokens.
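The masking objective can be sketched as a function that hides random tokens and records what the model should predict at each hidden position. The 15% masking rate and the `<mask>` token name are assumptions borrowed from common BERT-style setups, and the sketch skips refinements such as occasionally substituting a random token instead of the mask.

```python
import random
from typing import Dict, List, Optional, Tuple

MASK = "<mask>"  # assumed name for the special mask token


def mask_tokens(
    tokens: List[str], mask_prob: float = 0.15, seed: Optional[int] = None
) -> Tuple[List[str], Dict[int, str]]:
    """Hide each token with probability `mask_prob`.

    Returns the corrupted sequence plus {position: original token}; those
    originals are the targets the model is trained to predict.
    """
    rng = random.Random(seed)
    corrupted: List[str] = []
    targets: Dict[int, str] = {}
    for position, token in enumerate(tokens):
        if rng.random() < mask_prob:
            corrupted.append(MASK)
            targets[position] = token
        else:
            corrupted.append(token)
    return corrupted, targets


tokens = "def add ( a , b ) : return a + b".split()
corrupted, targets = mask_tokens(tokens, seed=1)
print(corrupted)
print(targets)
```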

Next, the model is trained on sequences that have been corrupted by randomly masking, shuffling, or removing tokens; it must learn to produce the original, uncorrupted sequence.
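The corruption step for this denoising objective can be sketched as a noise function that drops, masks, and locally shuffles tokens; the model would then be trained to map the noisy output back to the clean sequence. The probabilities and window size are assumed values, and the shuffle uses a position-jitter scheme from earlier unsupervised NMT work (add uniform noise in [0, k+1) to each index and sort), which moves each token at most k positions.

```python
import random
from typing import List, Optional

MASK = "<mask>"  # assumed name for the special mask token


def add_noise(
    tokens: List[str],
    drop_prob: float = 0.1,
    mask_prob: float = 0.1,
    shuffle_window: int = 3,
    seed: Optional[int] = None,
) -> List[str]:
    """Corrupt a token sequence by dropping, masking, and locally shuffling tokens."""
    rng = random.Random(seed)
    # 1. Randomly drop tokens.
    kept = [t for t in tokens if rng.random() >= drop_prob]
    # 2. Randomly replace some of the remaining tokens with the mask token.
    masked = [MASK if rng.random() < mask_prob else t for t in kept]
    # 3. Local shuffle: jitter each index by U[0, shuffle_window + 1) and sort,
    #    so no token moves more than `shuffle_window` positions.
    sort_keys = [i + rng.uniform(0, shuffle_window + 1) for i in range(len(masked))]
    order = sorted(range(len(masked)), key=sort_keys.__getitem__)
    return [masked[i] for i in order]


clean = "def add ( a , b ) : return a + b".split()
noisy = add_noise(clean, seed=0)
print(noisy)  # training pair: model input is `noisy`, target is `clean`
```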

Finally, two versions of these models are trained in parallel to perform back-translation: one learns to translate from the source language to the target language, and the other learns to translate back into the source language.
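The data flow of back-translation can be sketched with two stand-in translators. Here the "models" are hypothetical word-substitution tables rather than neural networks, and the training step itself is omitted: each direction produces synthetic inputs from real monolingual code, and the opposite direction is trained to recover the real code from them.

```python
from typing import Callable, Dict, List, Tuple

Translator = Callable[[str], str]


def back_translation_pairs(
    monolingual: List[str], reverse_model: Translator
) -> List[Tuple[str, str]]:
    """Build (synthetic input, real output) pairs for the forward model.

    `reverse_model` translates real target-language code into the source
    language; the forward model then learns to reproduce the real code.
    """
    return [(reverse_model(code), code) for code in monolingual]


def toy_translator(table: Dict[str, str]) -> Translator:
    """Hypothetical stand-in for a neural model: token-by-token substitution."""
    def translate(code: str) -> str:
        return " ".join(table.get(tok, tok) for tok in code.split())
    return translate


JAVA_TO_PYTHON = {"&&": "and", "||": "or", "true": "True", "false": "False"}
PYTHON_TO_JAVA = {py: java for java, py in JAVA_TO_PYTHON.items()}

java_to_python = toy_translator(JAVA_TO_PYTHON)
python_to_java = toy_translator(PYTHON_TO_JAVA)

java_corpus = ["if ( a && b ) return true ;"]
python_corpus = ["return a or b"]

# Training data for the Python -> Java model: synthetic Python, real Java.
print(back_translation_pairs(java_corpus, java_to_python))
# Training data for the Java -> Python model: synthetic Java, real Python.
print(back_translation_pairs(python_corpus, python_to_java))
```

In iterative back-translation the translators are the models being trained, so the synthetic inputs improve as training proceeds, and each improvement feeds the opposite direction.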

Image source: https://arxiv.org/abs/2006.03511

To train the models, the team extracted samples from more than 2.8 million open-source GitHub repositories.

From these, they selected the files written in their languages of choice (Java, C++, and Python) and extracted individual functions. They chose to work at the function level for two reasons: function definitions are short enough to fit in a single training batch, and translating functions makes it possible to evaluate the model with unit tests.
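Function-level extraction can be sketched for Python files with the standard `ast` module. This collects only top-level functions and is an assumption about how such a step might look; the article does not describe TransCoder's actual extraction pipeline, which also has to handle Java and C++.

```python
import ast
from typing import Dict


def extract_functions(source: str) -> Dict[str, str]:
    """Map each top-level function name in a Python file to its source text."""
    tree = ast.parse(source)
    functions = {}
    for node in tree.body:  # top level only: methods inside classes are skipped
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            functions[node.name] = ast.get_source_segment(source, node)
    return functions


example_file = '''import os

def add(a, b):
    return a + b

class Helper:
    def method(self):
        pass

def negate(x):
    return -x
'''

for name, code in extract_functions(example_file).items():
    print(f"--- {name} ---\n{code}")
```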

Although many NLP systems use BLEU to evaluate their translations, the Facebook researchers note that this metric can be a poor choice for evaluating transpilers: outputs that are syntactically similar to the reference can have a high BLEU score but "could lead to very different compilation and computation outputs," while programs with different implementations that produce the same results can have a low BLEU score.
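The weakness the researchers describe can be reproduced with a simplified, single-reference BLEU (clipped n-gram precisions for n = 1 to 4, geometric mean, brevity penalty, no smoothing). This is a hand-rolled sketch for illustration, not a standard implementation such as sacreBLEU, and the token sequences are made up: a one-token change that computes a different result scores far higher than an equivalent rewrite.

```python
import math
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Count every contiguous n-token window."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: List[str], reference: List[str], max_n: int = 4) -> float:
    """Geometric mean of clipped n-gram precisions times a brevity penalty."""
    log_precisions = []
    for n in range(1, max_n + 1):
        cand, ref = ngrams(candidate, n), ngrams(reference, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
        total = sum(cand.values())
        if overlap == 0 or total == 0:
            return 0.0  # no smoothing in this sketch
        log_precisions.append(math.log(overlap / total))
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)


reference = "def add ( a , b ) : return a + b".split()
wrong = "def add ( a , b ) : return a - b".split()  # computes a different result
equivalent = "def add ( a , b ) : return sum ( [ a , b ] )".split()  # same results

print(round(sentence_bleu(wrong, reference), 2))       # → 0.83
print(round(sentence_bleu(equivalent, reference), 2))  # → 0.5
```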

The team therefore chose to evaluate its transpiler's output with unit tests. The tests were taken from the GeeksforGeeks website by collecting problems whose solutions were written in all three target languages; this produced a set of 852 functions.
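Unit-test-based scoring can be sketched as follows: a translated function counts as correct only if it returns the same value as the reference solution on every test input, and the score is the fraction of problems whose translation passes. For illustration, both the references and the hypothetical translations are Python callables; in the real evaluation the translations are in a different language and must be compiled and executed.

```python
from typing import Any, Callable, List, Sequence, Tuple

Problem = Tuple[Callable[..., Any], Callable[..., Any], Sequence[tuple]]


def passes_all_tests(reference: Callable[..., Any], translation: Callable[..., Any],
                     test_inputs: Sequence[tuple]) -> bool:
    """True if `translation` matches `reference` on every input tuple."""
    for args in test_inputs:
        expected = reference(*args)  # the reference solution is trusted
        try:
            if translation(*args) != expected:
                return False
        except Exception:
            return False  # a crash counts as a failed test
    return True


def unit_test_score(problems: List[Problem]) -> float:
    """Fraction of problems whose translation passes all of its tests."""
    passed = sum(passes_all_tests(ref, tr, tests) for ref, tr, tests in problems)
    return passed / len(problems)


def reference_add(a, b):
    return a + b


def translated_add(a, b):
    return sum([a, b])  # different code, same behaviour: passes


def reference_max(xs):
    return max(xs)


def translated_max(xs):
    return xs[0]  # buggy translation: fails on [1, 5, 2]


problems = [
    (reference_add, translated_add, [(1, 2), (-3, 3)]),
    (reference_max, translated_max, [([1, 5, 2],), ([7],)]),
]
print(unit_test_score(problems))  # → 0.5
```

Unlike BLEU, this check rewards the equivalent `sum([a, b])` rewrite and penalizes the translation that merely looks plausible.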

The team compared TransCoder's performance on this test set with two existing transpilers: the j2py Java-to-Python converter and Tangible Software Solutions' C++-to-Java converter. TransCoder "significantly" outperformed both, scoring 74.8% on C++-to-Java and 68.7% on Java-to-Python, compared with 61% and 38.3% respectively for the existing tools.

In a discussion on Reddit, one commenter compared the idea to GraalVM's strategy of providing a single runtime that supports multiple languages. Another commenter wrote:

[TransCoder] is a fun idea, but I think translating the syntax is the easy part. What about memory management, runtime differences, library differences, etc.?

In the TransCoder paper, the Facebook AI Research team states that it intends to release the "code and trained models," but it had not done so at the time of writing.

Source: arXiv